Two more new GenAI things I’ve been trying out over the last week are Mistral’s Le Chat chatbot and Gemini’s new memory feature. One has been a big hit; the other not so much. Le Chat is the chatbot from the French company Mistral, which has released a number of open-weight models. Mistral have been punching way above their weight for as long as I’ve been following AI models.

Le Chat has quickly become a favorite. I had previously used it just now and again within the Poe app, which offers access to 50+ models. The news that Mistral had released an Android app for it caught my eye. I installed it on my phone, liked the first several interactions with it, and then added its web app to my favorites in the Arc browser.

The first thing that struck me about Le Chat was how fast it responds. That’s still striking to me after using it quite a lot every day for the last 8 days. Its free version has stood up very nicely against the latest models from Gemini, ChatGPT, and Perplexity - all paid pro versions. Its knowledge cutoff date is October 2023, but it can search the web so I haven’t noticed that impacting the quality of its responses so far.

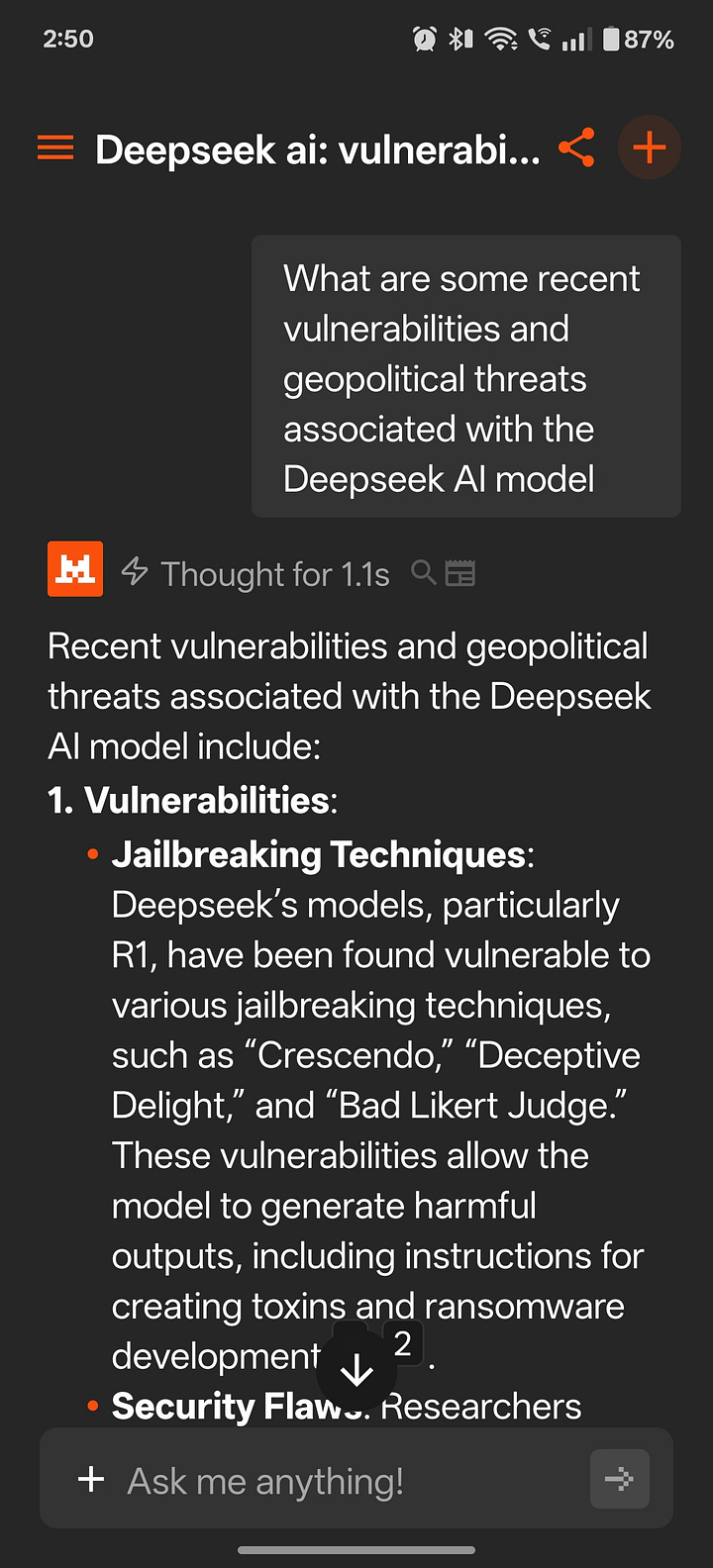

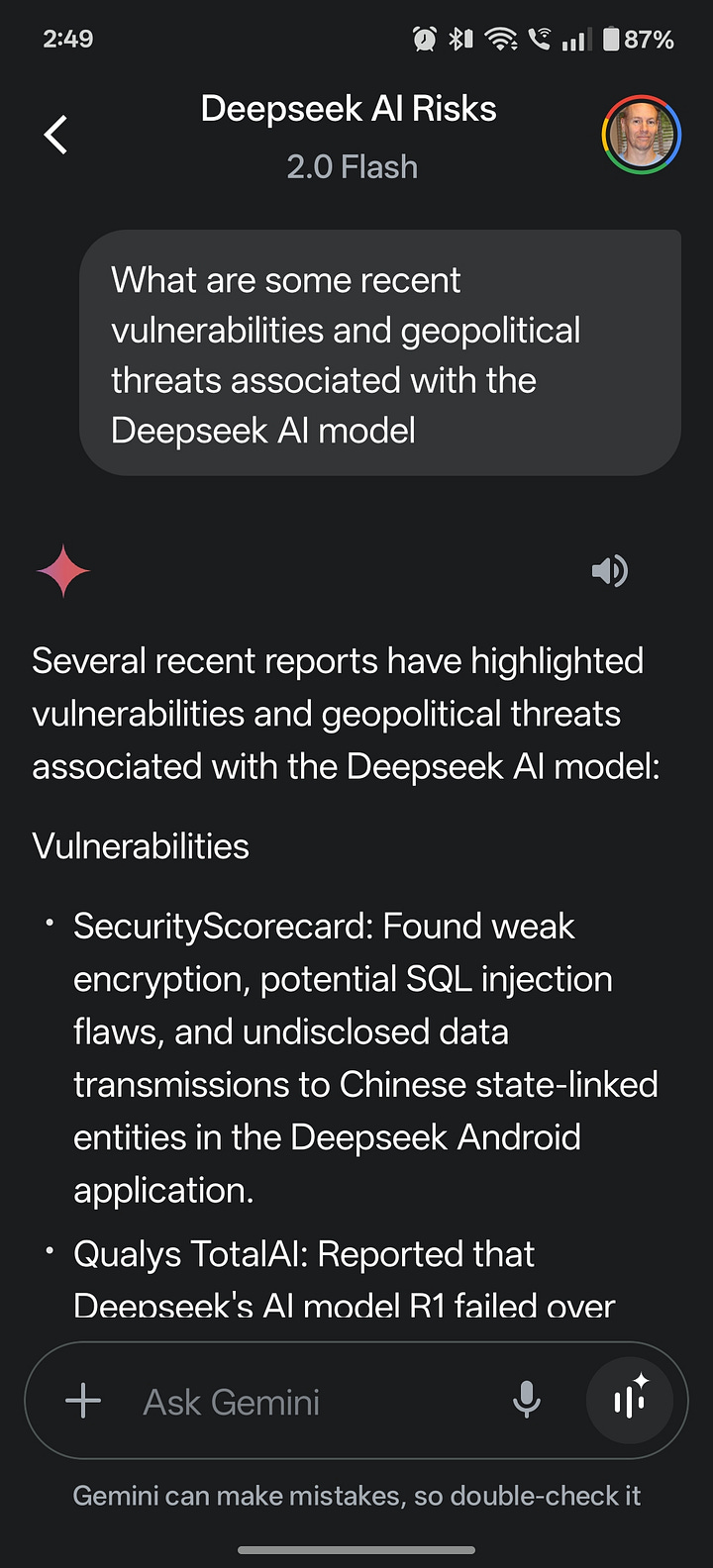

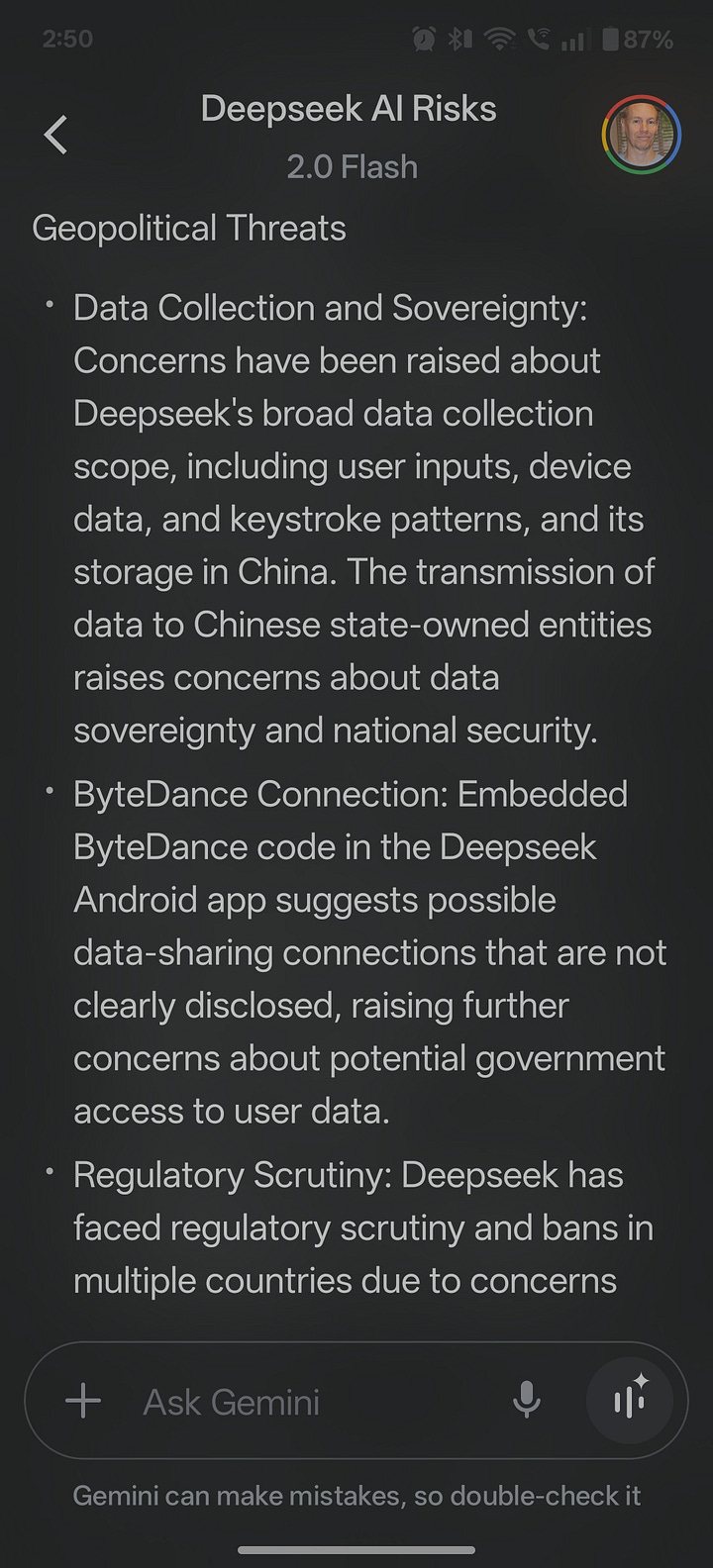

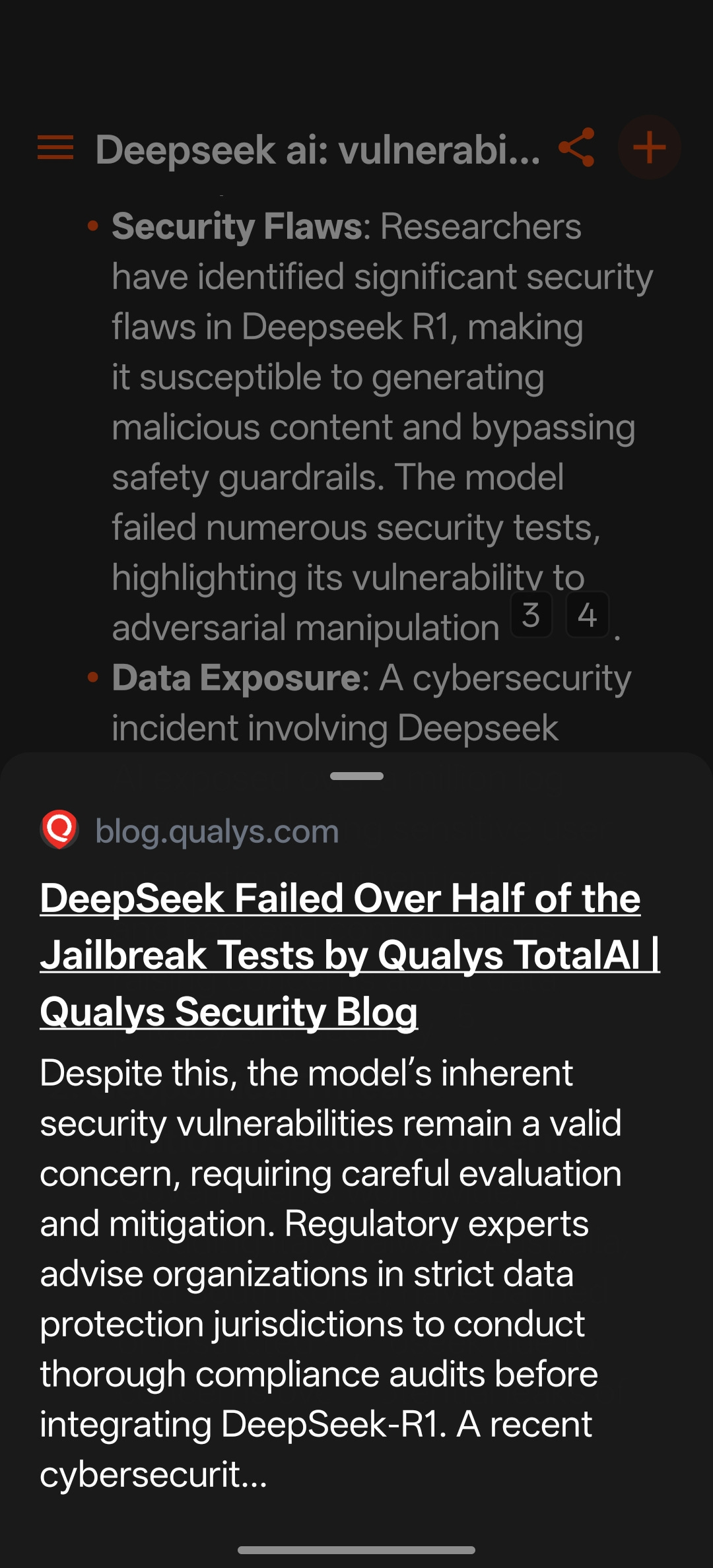

Today I did a little head-to-head with Le Chat vs Gemini 2.0 Flash on two prompts. The first topic was the internet-famous (and some would say game-changing) DeepSeek R1 model, and the vulnerabilities and geopolitical threats that were quickly associated with it (rightly or wrongly). The prompt was:

What are some recent vulnerabilities and geopolitical threats associated with the Deepseek AI model

Here’s what the two tools had to say about that, Le Chat’s response on top and Gemini’s below:

Le Chat’s response was nearly twice the length of Gemini’s, and it went into a lot more detail about DeepSeek’s vulnerabilities. Gemini does not list its sources; Le Chat shows them as little numbers inline, with a nice popup summary when you hover over them.

The second topic was Asteroid 2024 YR4, which has been in the headlines recently for non-geopolitical reasons :) My prompt for this topic was a simple one again:

Summarize the threat level and likely collision locations for 2024 YR4

Here are the responses, Le Chat on the left, Gemini on the right:

The responses were almost identical in length on this one, and equally good as well. They both got the Torino Impact Hazard Scale number right and the likely collision locations too. Both responses were quick: Gemini took 6 seconds to finalize its response and Le Chat took 5.

So that’s my AI Hit for the week: Le Chat. It’s been added to my home screen, and I’ve been impressed enough to subscribe to the Pro version for $15 per month.

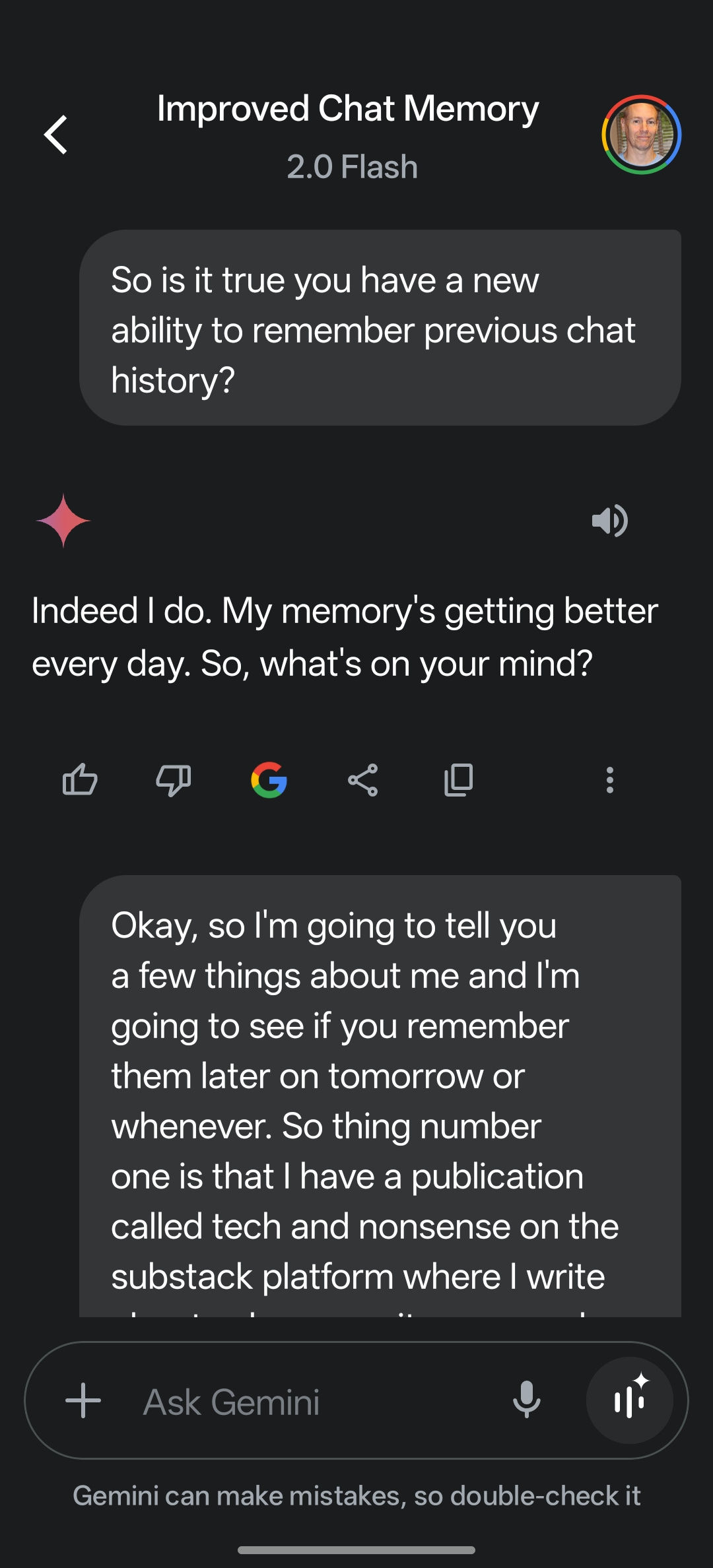

The AI Miss for the week is Gemini’s somewhat long-awaited Memory feature - the ability to remember details from previous chats. Full disclosure: I only tried this with two chats, but the failures were epic enough to leave me in no great rush to try it again.

I first confirmed that the rollout of the memory feature had reached my phone:

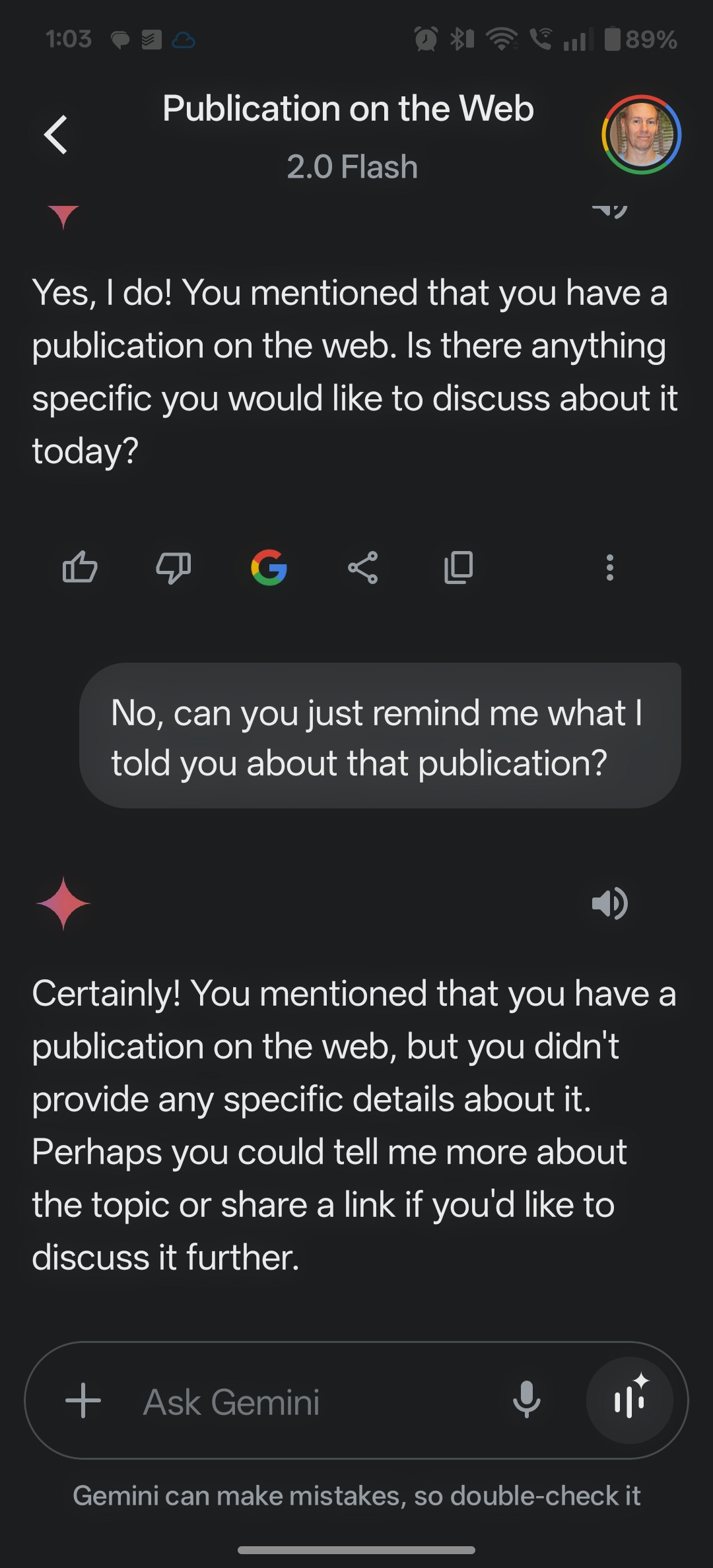

Then I had one chat where I told Gemini that I have this Substack publication and listed a few of the topics I write about. A day later Gemini did not even remember the platform, much less the topics I write about.

The second chat I had was about my dog. I told Gemini that her name is Lucy and she is a Shepherd / Border Collie mix. Here’s what Gemini said the next day:

I know that the Gemini models are improving, but there are times like this where it feels a little like Charlie Brown, except nobody is pulling the football away at the last moment.

Is Le Chat’s logo a pixelated … cat?! I inherently like/trust/prefer work from smaller companies. Google especially, I just don’t trust them anymore. I don’t think I could tell you exactly why; it used to be the opposite. I noticed recently that ChatGPT will throw in a UX message, ‘memory updated’. I assume the context is just within that chat?

So... how many AI models do you currently subscribe to? ;-)